There's plenty of tools out there that can warn you about problematic things they notice, but that's rather different from letting you know if an API is good quality. Not triggering any warnings doesn't mean it's great, it just means it's not bad. Thankfully, two tools have popped up to take care of that: API Insights by Treblle, and Rate my OpenAPI by Zuplo.

When I was helping to build Spectral (and Speccy before that) the goal was to build a tool which could help you stick to certain conventions, use certain standards, and avoid pitfalls. This was coming from an API Governance angle, helping people doing API Design Reviews not have to keep checking for the same problems, making sure the right HTTP authentication method was being used, using JSON:API not rando-JSON, etc. It always felt very "don't do that" centric, and what I really wanted to see was something more like the SSL Test by SSL Labs, which gave you an A-F rating and let you know what you were doing well too.

Both API Insights and Rate my OpenAPI aim to do this, and whilst they are very new tools they are both showing a lot of promise. Let's have a play with them and see how they handle the Tree Tracker API that a rag-tag crew of volunteers has built to power Protect Earth's reforestation work.

API Insights

Getting started with API Insights is easy; you can either go to apiinsights.io or grab the API Insights app from the Mac Store. The idea is that you load an OpenAPI document into the tool, and it will inspect not just the OpenAPI itself, but the actual hosted API listed in the servers array, which helps give feedback on server settings controlled by the implementation that might not live in OpenAPI.

At first I had a bit of a fumble trying to get my OpenAPI document into the tool, as I've split it up using loads of $ref's and it wanted me to provide one file. Thankfully, Redocly CLI helps bundle OpenAPI documents into a single YAML/JSON file and then it's easy to shove that into either the web app or the Mac app.

$ redocly bundle openapi.yaml -o openapi.bundled.yaml

With the document loaded in, I just needed to hit "Get Insights" and a few moments later I had my API score.

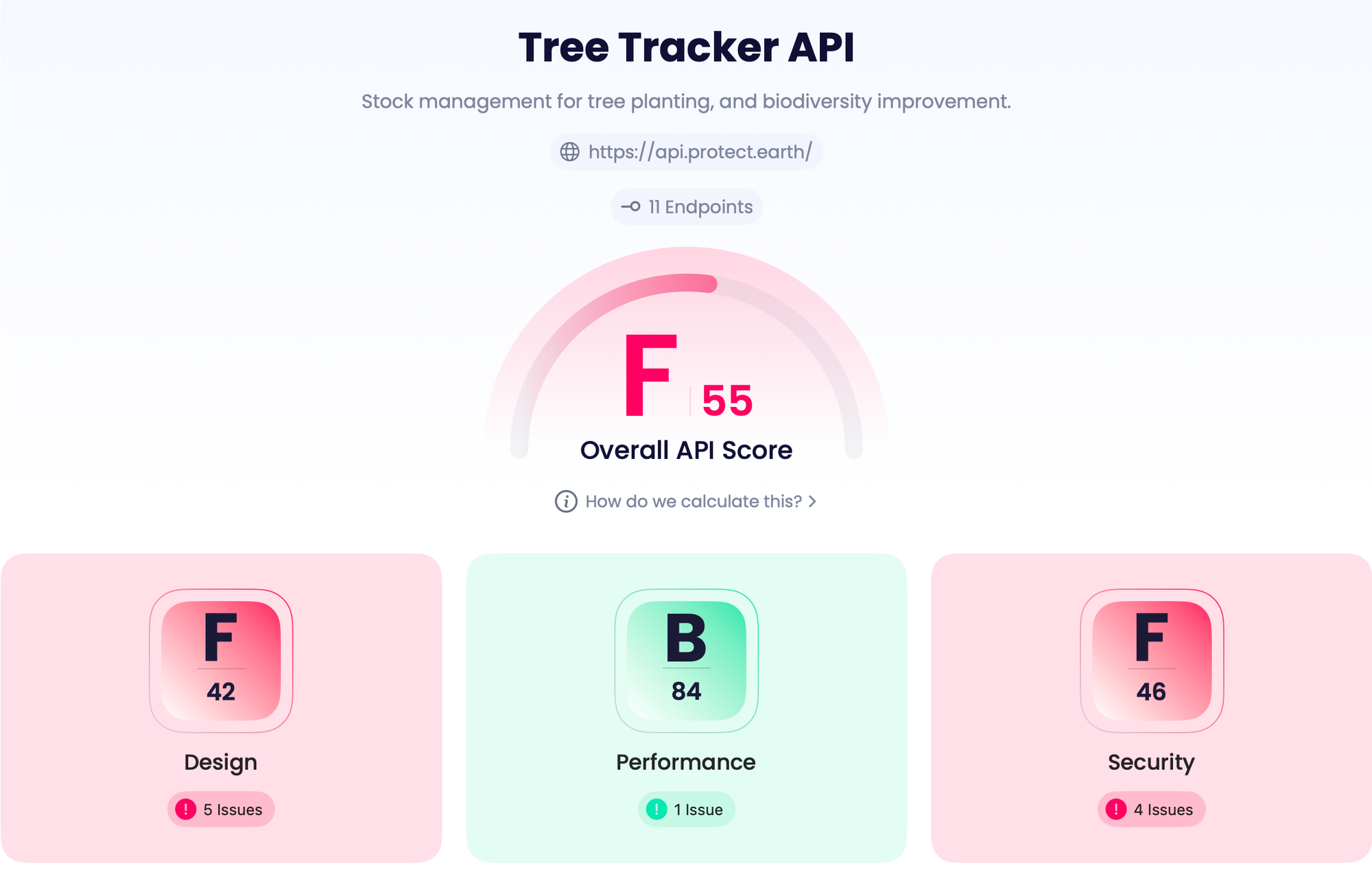

Oh dear, I built an API that this tool hates!

What does API Insights score your API on?

There are three sections: Design, Performance, Security. I'll start with security, because it's the least controversial.

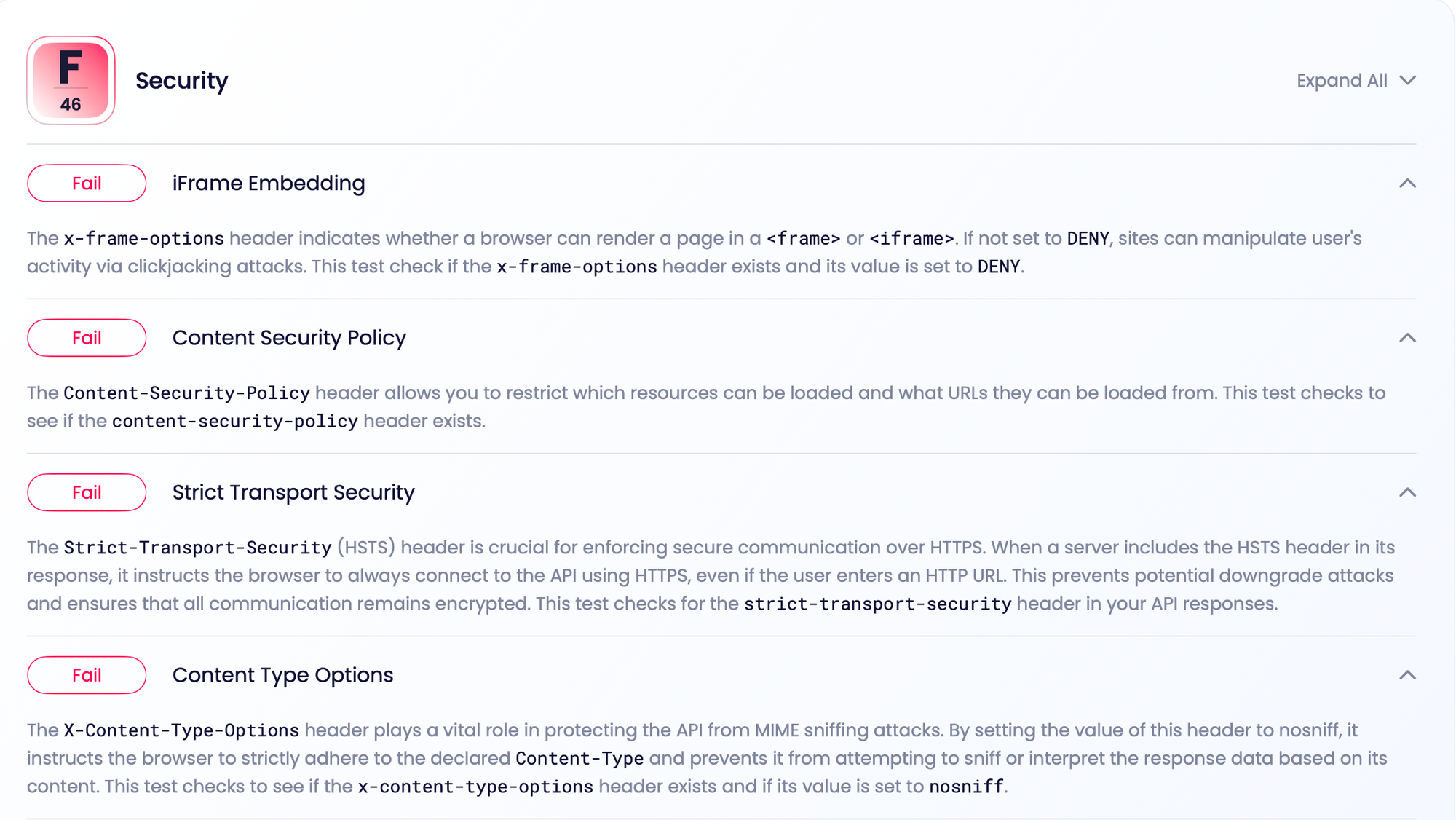

These are all the things that I always forget to do with an API, that should probably be enabled by more Web Application Frameworks by default, especially the ones specifically aiming to be API-centric, or have an API mode.

How much do they matter? I'm not sure, the tool doesn't educate me on why I need to do any of this, which could be as simple as linking to MDN to explain things like why I need the Content-Security-Policy header.

There are definitely a lot of people who will want to know why they need to do things, especially if it involves a breaking change to their API, but just as equally there are plenty of people who will dive in and start doing this stuff anyway. I found myself in the "rushing to do it regardless" camp because I want the high score, and this is a large part of why API Scoring can help in an API Governance program, because however much you'd like to deny it, people are motivated by shiny stickers.

Some of the checks will need tweaking, because they are pushing you towards slightly outdated practices. For example, it recommends the x-frame-options header, which has two issues:

- x-headers should never be used for anything and are being deprecated with a vengeance across the world of HTTP.

- The Content-Security-Policy HTTP header has a frame-ancestors directive which replaces x-frame-options.

I mentioned this to the team and they're going to see how they implement support for both. It could be a case of giving you an A for using the modern approach, a B for using the older approach, and an F for using nothing. Either way it's good to get people thinking about these headers.
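
That A/B/F idea is simple enough to sketch. The Python below is purely illustrative: `grade_framing_protection` is a made-up name, the grade boundaries are an assumption lifted from the paragraph above, and none of this is how API Insights actually scores anything.

```python
def grade_framing_protection(headers: dict[str, str]) -> str:
    """Grade clickjacking protection: A for CSP frame-ancestors,
    B for the older X-Frame-Options header, F for neither."""
    # HTTP header names are case-insensitive, so normalise before any lookup.
    normalised = {name.lower(): value for name, value in headers.items()}

    csp = normalised.get("content-security-policy", "")
    # CSP directives are separated by semicolons; the directive name is the first token.
    directives = [d.strip().split()[0].lower() for d in csp.split(";") if d.strip()]

    if "frame-ancestors" in directives:
        return "A"  # modern approach
    if "x-frame-options" in normalised:
        return "B"  # older approach, still better than nothing
    return "F"


print(grade_framing_protection({"X-Frame-Options": "DENY"}))
```

Feed it the headers from a real response and a `frame-ancestors` directive earns the A, while a lone `X-Frame-Options` settles for a B.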

Another check was Strict Transport Security (HSTS), and it's not entirely clear where API Insights is expecting to see those headers. From the description of the check I think they are looking to see it in my actual OpenAPI document, like this:

    responses:
      '200':
        description: successful operation
        headers:
          Strict-Transport-Security:
            schema:
              type: string
            example: max-age=31536000; includeSubDomains

I gotta be honest, I'm never going to do that. Not even if I make it a shared reference and $ref it into every response, I just... would never ever bother for any reason. Not only does this feel far too much like an infrastructure implementation detail to be in OpenAPI, but if I pop this header into the OpenAPI it's going to confuse my OpenAPI-based contract testing, which is now expecting a header that hasn't appeared. I'd need to move it into my source code instead of letting the server (nginx, Cloudflare, etc) add it... which... maybe I should?

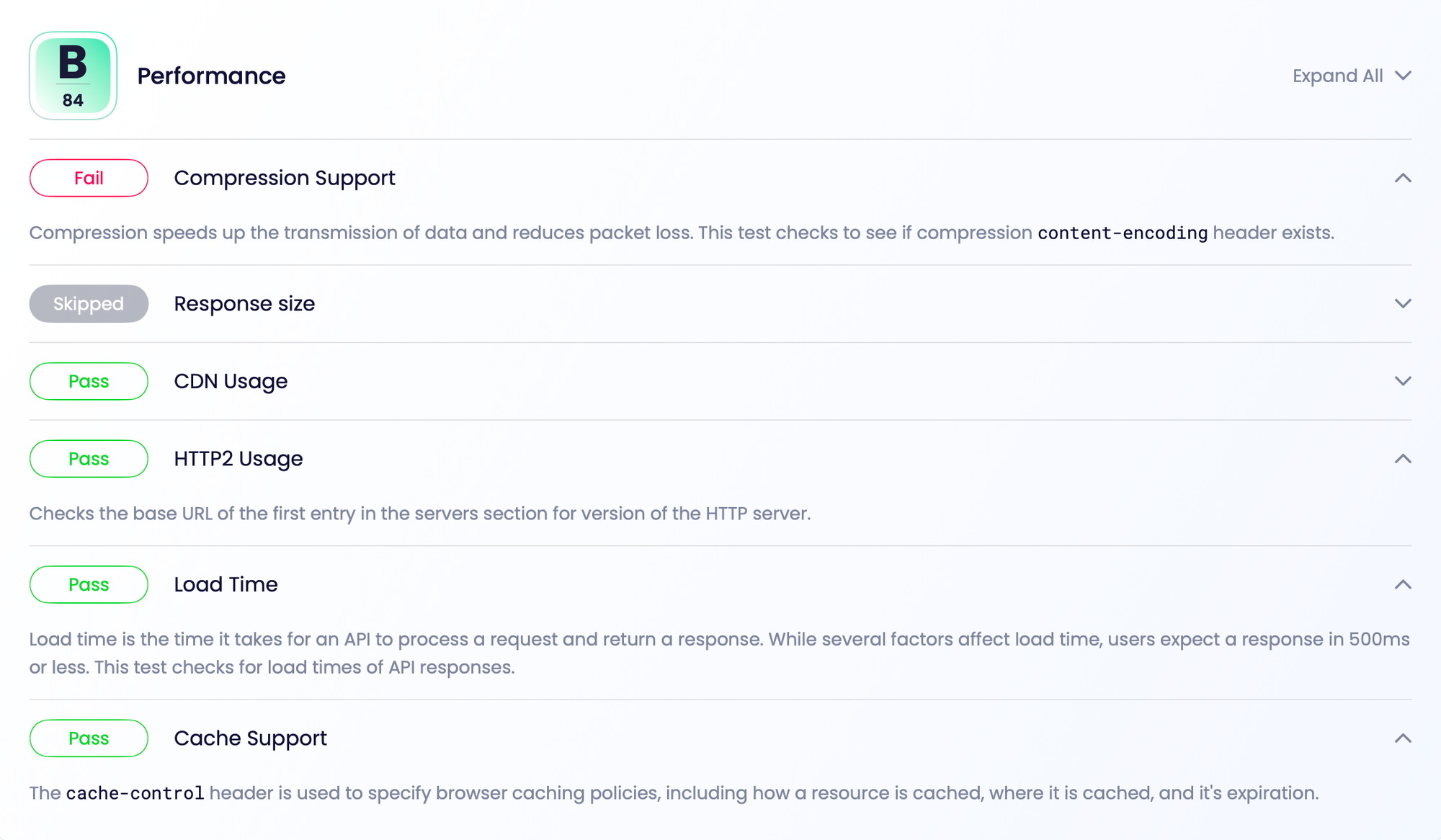

Performance has a great list of checks:

- Cache Support

- CDN Usage

- Compression Support

- Load Time

- Response size

- HTTP/2 Usage

Thanks to using Cloudflare it looks like I got a great score without having to do anything.

I always forget to add content-encoding to APIs I build, and stupidly just fire full-fat JSON around worsening the 4% of global CO2eq emissions caused by the internet. I should be using gzip or br to squash my response sizes! Thank you for reminding me API Insights. Learn more about content encoding in APIs from HTTP Toolkit, and google around until you find some sort of middleware or copy/paste code on Stack Overflow.
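
To get a rough feel for what compression buys you, here is a small Python sketch using only the standard library. The payload is invented (200 near-identical tree records), so treat the numbers it prints as a toy illustration rather than a benchmark of any real API.

```python
import gzip
import json

# Hypothetical payload: 200 tree records shaped like a typical JSON collection.
payload = {
    "data": [
        {"id": i, "type": "tree", "species": "Quercus robur", "planted_at": "2023-11-04"}
        for i in range(200)
    ]
}

raw = json.dumps(payload).encode("utf-8")
compressed = gzip.compress(raw)  # roughly what "Content-Encoding: gzip" puts on the wire

print(f"raw: {len(raw)} bytes, gzip: {len(compressed)} bytes")
```

In a real API you would normally let the framework, web server, or CDN do this for you, and only when the client sends an Accept-Encoding header that allows it.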

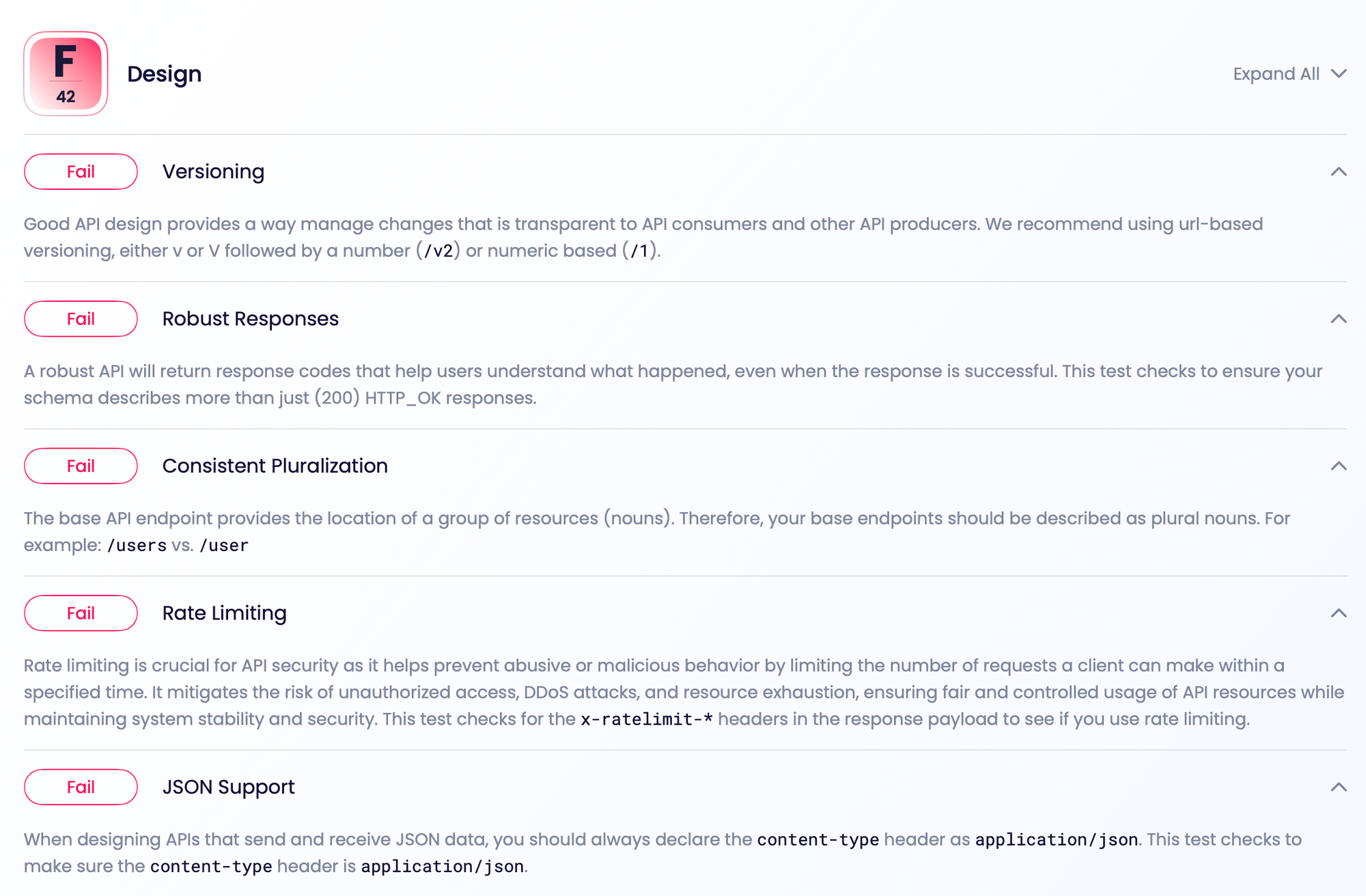

The Design section is a bit more controversial to me than these other checks.

- Consistent Noun Usage

- Consistent Pluralization

- JSON Support

- Multiple HTTP Methods

- Rate Limiting

- Robust Responses

- Versioning

It's really hard for a tool like this to try and make a single set of checks for everyone making any type of API, and API design has a lot of different opinions floating around. API Insights works by defining one set of quality metrics and doesn't have "rulesets" like other API linters, so the tool needs sensible defaults, and I'm not sure they've got them all right just yet.

"Consistent Noun Usage" wants more than just GET methods to be used, but if you have a read-only API you will only have GET methods. I do not think a read-only API is a bad one, but this will ding your score. A more advanced check could look for GET or POST methods with words like "delete" in the URL, summary, or operationId, and ding your score for dangerously misusing HTTP.

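A minimal sketch of that more advanced check, in Python. It assumes the OpenAPI document has already been bundled and parsed into a dict, and both the function name and the list of suspicious words are hypothetical placeholders, not anything API Insights ships.

```python
# Assumption: words that hint an operation is destructive. Tune to taste.
DESTRUCTIVE_WORDS = ("delete", "remove", "destroy")


def find_misused_operations(openapi: dict) -> list[str]:
    """Return 'METHOD /path' for GET/POST operations that look like deletes in disguise."""
    findings = []
    for path, path_item in openapi.get("paths", {}).items():
        for method, operation in path_item.items():
            # Skip other methods and non-operation keys such as "parameters".
            if method.lower() not in {"get", "post"} or not isinstance(operation, dict):
                continue
            # Search the URL, summary, and operationId in one go.
            haystack = " ".join(
                [path, operation.get("summary", ""), operation.get("operationId", "")]
            ).lower()
            if any(word in haystack for word in DESTRUCTIVE_WORDS):
                findings.append(f"{method.upper()} {path}")
    return findings
```

A proper DELETE operation is never flagged, so a well-behaved API passes untouched, and a read-only API is not punished just for being read-only.
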
"Consistent Pluralization" means pluralizing your /widget to /widgets, which is not really a requirement for REST or even necessarily good practice. You might have a /me endpoint which definitely does not need to be /mes. Not sure what to suggest here.

Rate Limiting is a good rule which looks at your OpenAPI to see if rate limiting is defined. Again it currently focuses on an outdated non-standard approach, requiring x-ratelimit-* which is a convention from Twitter and Vimeo. There's a draft web standard that attempts to standardize this: IETF Draft HTTP RateLimit Headers. Awarding an equal or higher score for using those headers would be a good improvement.
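
Scoring both styles could look something like this Python sketch. It assumes a parsed OpenAPI response object, and because the header names have shifted between revisions of the IETF draft, it loosely treats any header starting with `RateLimit` as the draft style. The function name and return labels are hypothetical.

```python
def rate_limit_style(response: dict) -> str:
    """Classify the rate limit headers documented on one OpenAPI response object."""
    names = [name.lower() for name in response.get("headers", {})]
    # Assumption: any header starting with "ratelimit" counts as the IETF draft style,
    # since the exact header names have changed between draft revisions.
    if any(name.startswith("ratelimit") for name in names):
        return "draft-standard"
    # The older Twitter/Vimeo style convention.
    if any(name.startswith("x-ratelimit-") for name in names):
        return "legacy"
    return "none"


print(rate_limit_style({"headers": {"X-RateLimit-Remaining": {}}}))
```

A scoring tool could then give "draft-standard" at least as many points as "legacy", and only ding the score for "none".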

Finally, API versioning... this one is always going to be hell to try and write any one rule for, because API Versioning Has No "Right Way". The API I am using switched from API versioning to API Evolution (we literally removed the /v1) and so far all updates have happened in a non-breaking way thanks to changing /trees to /units with a type. API Evolution is a solid alternative to API versioning, but admittedly it's a big change for a lot of people, and API Versioning is still the predominant strategy. Whichever you prefer, should this tool tell me I am wrong for using API Evolution and prompt me to start API versioning again?

API Insights Summary

API Insights is a little different from the current field of API linters (Spectral, Redocly CLI, Optic) which focus on what they can see in the OpenAPI document, as it will go over the wire via HTTP to see what your API is up to too.

Requiring an API implementation to exist means this will likely not be a tool you use during the API Design phase, but something you use to see how your current API is doing, and to give you direction on improvements to consider for the next version.

Whether these checks are looking at OpenAPI or going over the wire, I think an "Explain" section would be super handy in the interface, which shows you what the tool expected, what it found (or didn't find), what it would prefer I do about it, and why. Together this Explain section would be a powerful tool for helping developers make improvements, like Google Lighthouse for APIs.

Finally, the API versioning check raises the question: should API rating tools base their scores on what is currently popular, or should they be more prescriptive, trying to push people towards API design deemed "better"? If so, who gets to decide "better"? It's not an easy line to walk, but the Treblle team are only just getting started and I am excited to see how this tool evolves.

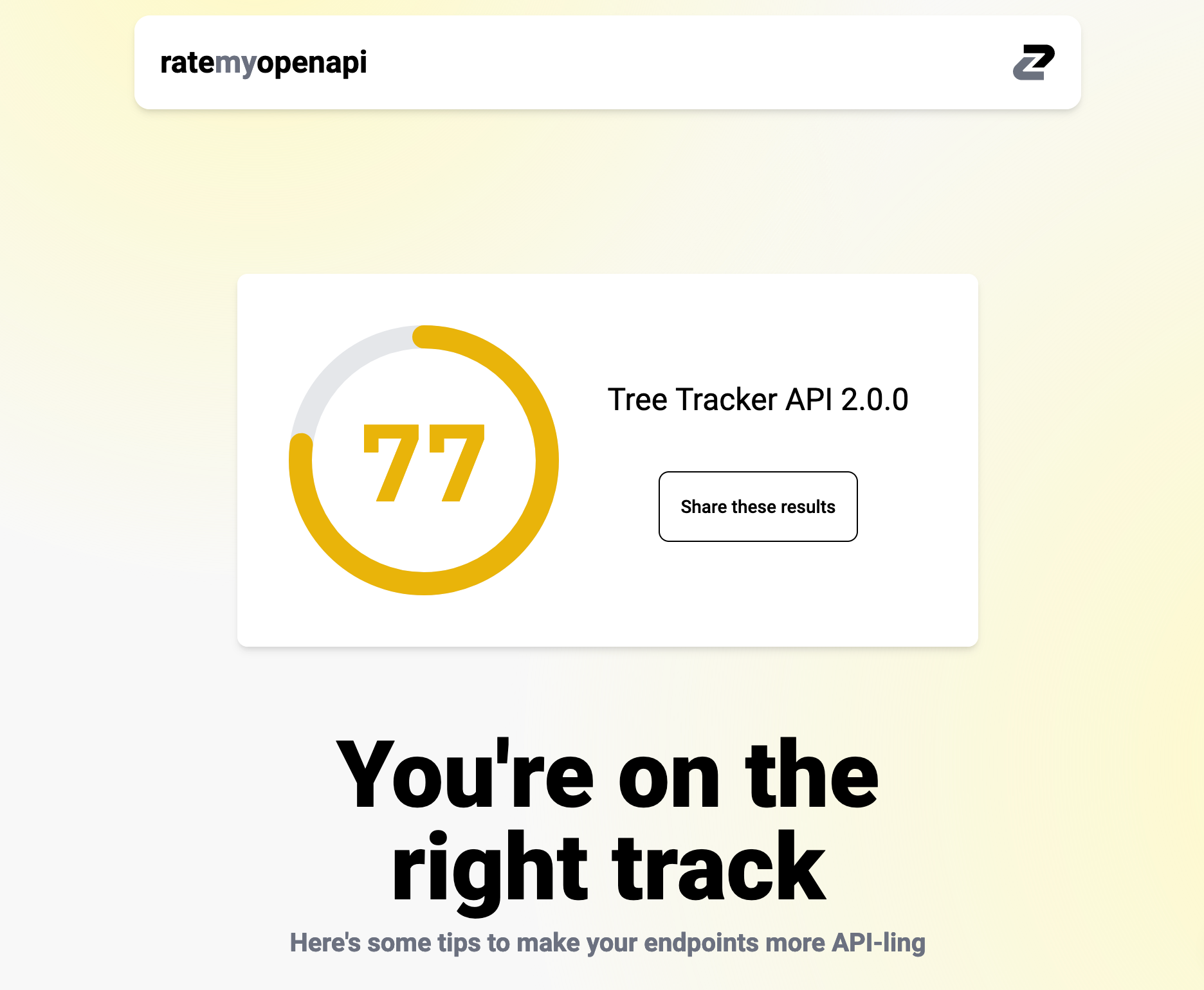

Rate my OpenAPI

Zuplo's Rate my OpenAPI tool aims to do the same sort of thing as API Insights, and wins a point for being the first released. Just like API Insights it supports a single OpenAPI document as a file upload which will need to be bundled if you split your document into multiple components. Unlike API Insights there is no support for pointing to OpenAPI hosted on a URL, and there's no Mac app if that's something you find important.

Once you've uploaded your OpenAPI document it will ask for an email address, then it will email you the report. This only takes a minute to come through.

You will receive a score out of 100 like API Insights, but there is no A-F rating. In API Insights my API got an F (55/100), so how did this score in Rate my OpenAPI?

Straight away I'm getting advice on specifically what I need to do:

Summary

Well, well, well, looks like we've got some issues with your OpenAPI file. Brace yourself, because here's the lowdown in just 2 lines: You've got security and server problems, along with some missing error responses and rate limit issues. Oh, and let's not forget the unused components and missing descriptions. Time to roll up those sleeves and get to work!

The summary is interesting because it seems a bit more conversational, and it's pointing me to exactly which line number my first issue is on:

Alright, let's dive into the summary of issues in your OpenAPI file. Brace yourself, because we're about to embark on a snarky adventure!

Issue 1: apimatic-security-defined (1 occurrence) Oh dear, it seems like your API is missing some security definitions. You wouldn't want just anyone waltzing in and wreaking havoc, would you? Time to tighten up your security game!

Issue 2: oas3-server-trailing-slash (1 occurrence) Ah, the notorious trailing slash issue! It appears that your server URL has a sneaky little slash at the end. While it may seem harmless, it can cause confusion and lead to unnecessary redirects. Let's trim that slash and keep things tidy!

Issue 3: owasp:api3:2019-define-error-responses-500 (22 occurrences) Well, well, well, looks like your API is throwing some unhandled 500 errors. It's like a party for internal server errors, and nobody wants to attend that party! Time to define some error responses and give those 500 errors a proper send-off.

Alright, those are the top three highest severity issues from your OpenAPI file. Fixing these will give your API a solid foundation to build upon. Remember, snarky tone aside, we're here to help you create a kick-ass API!

This made me chuckle, because it reminded me a lot of Speccy, the original OpenAPI linter which I described as an automated #WellActually tool, nagging you about doing things wrong.

The names of the "issues" (e.g.: oas3-server-trailing-slash and owasp:api3:2019-define-error-responses-500) look pretty familiar: they match the names of two "rules" in the Spectral default openapi ruleset, and the spectral-owasp ruleset.

Is Rate my OpenAPI a wrapper around Spectral? I had to know, and thankfully finding out was easy. Sure I could have asked the team at Zuplo, but I didn't need to as their implementation is open-source on GitHub and after a quick bimble around their source code I spotted some chunks of Spectral are being pulled into the app.

"@stoplight/spectral-core": "^1.18.3",

"@stoplight/spectral-parsers": "^1.0.3",

"@stoplight/spectral-ruleset-bundler": "^1.5.2",

There's also a few references to Vacuum, a Go-based OpenAPI tool which is compatible with Spectral rulesets. Seeing these tools in there helps me understand the intentions of the tool a little more. Clearly from the name "Rate my OpenAPI" we could already tell the tool is more focused on reporting issues it can see in OpenAPI documents than it is about hitting the API over HTTP to score the implementation too, but seeing Spectral & Vacuum in there confirms that. Of course, layers could be added on top to hit the actual API, but that might be going against the intention of the name.

What does Rate my OpenAPI score your API on?

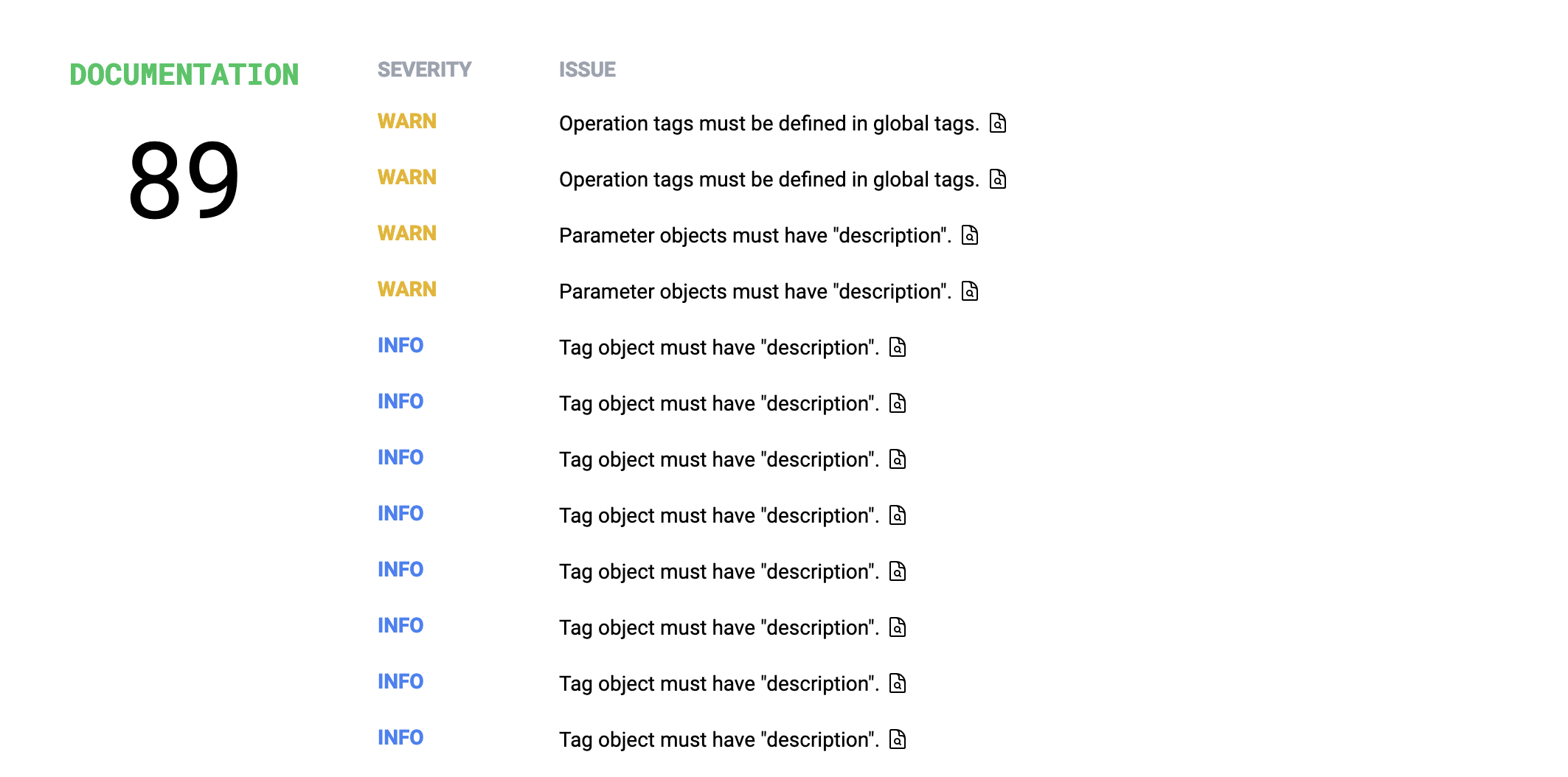

The overall rating is broken into four categories which each have a score.

- Documentation

- Completeness

- SDK Generation

- Security

First things first, documentation.

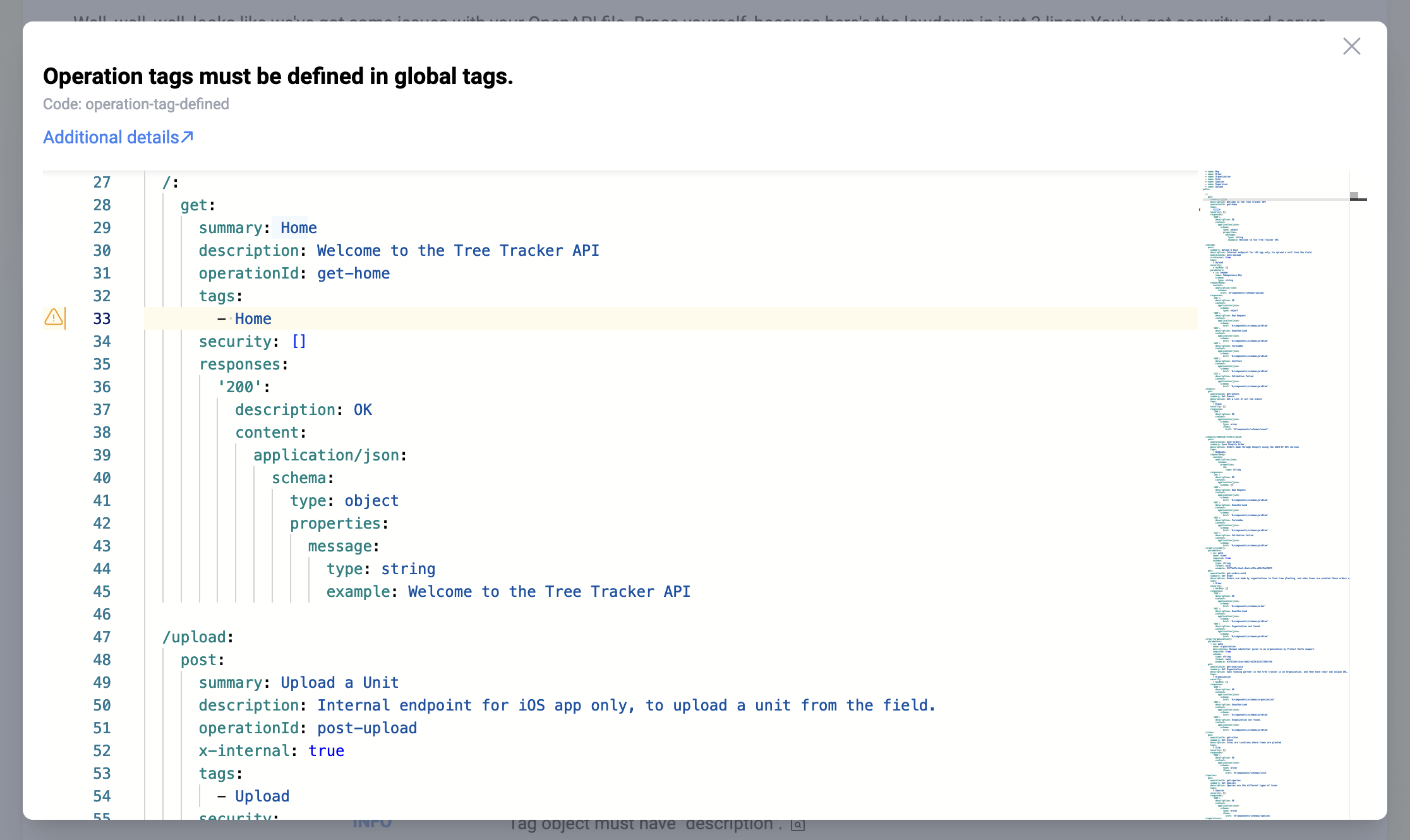

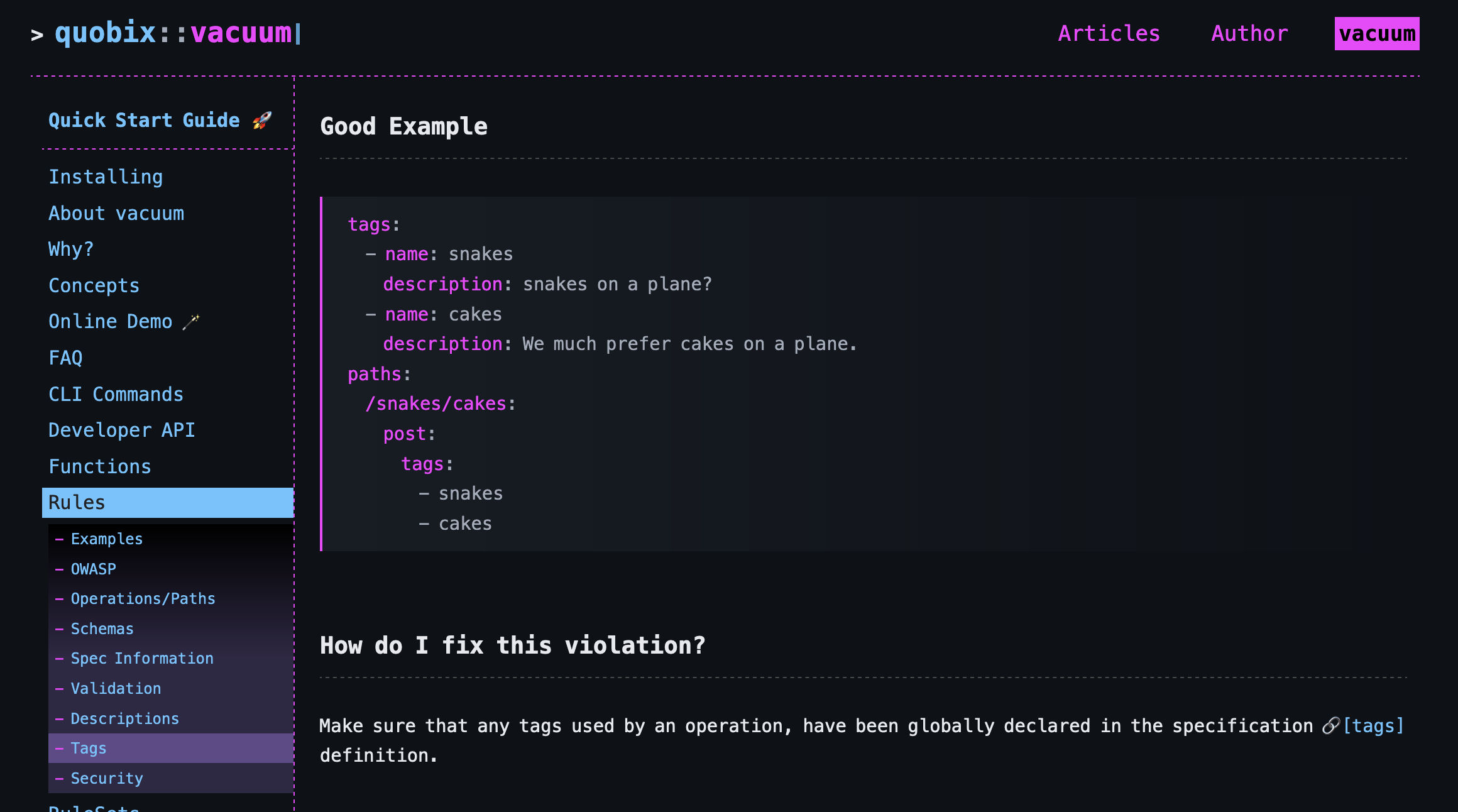

At first I thought this was the dreaded "you should use tags" rule which nobody ever liked in Spectral, but thankfully not. This is correctly pointing out that I have referenced a tag in an operation which I did not define in the global tags array. Oops!

How did I know that was the case? Well, Rate my OpenAPI told me. I can click on the 📄 icon next to the issue, and it will show me where it found it in my OpenAPI document.

Well, ok it didn't tell me exactly, it's more like it pointed to where the problem occurred, and then they have an Additional Details link which sends you off to the corresponding rule documentation in the Vacuum documentation, and that told me.

This is really helpful, and most of what I would have liked API Insights to do with an "Explain" section. Rate my OpenAPI could do a little more within the app and say something like "We expected to see Home defined as a tag in the global tags: keyword." to save you a click, but this does get you there.
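
Producing that sort of message does not take much. Here is a hedged Python sketch, assuming a bundled OpenAPI document parsed into a dict; `undefined_tag_messages` is a hypothetical helper, not part of Rate my OpenAPI, Spectral, or Vacuum.

```python
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def undefined_tag_messages(openapi: dict) -> list[str]:
    """Explain every operation tag that is missing from the global tags array."""
    defined = {tag["name"] for tag in openapi.get("tags", [])}
    messages = []
    for path, path_item in openapi.get("paths", {}).items():
        for method, operation in path_item.items():
            # Path items can also hold keys like "parameters"; only look at operations.
            if method.lower() not in HTTP_METHODS:
                continue
            for tag in operation.get("tags", []):
                if tag not in defined:
                    messages.append(
                        f"{method.upper()} {path}: expected to see {tag!r} "
                        "defined in the global tags array."
                    )
    return messages
```

Each message says where the problem is and what was expected, which is the "what" and the "why" in a single line.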

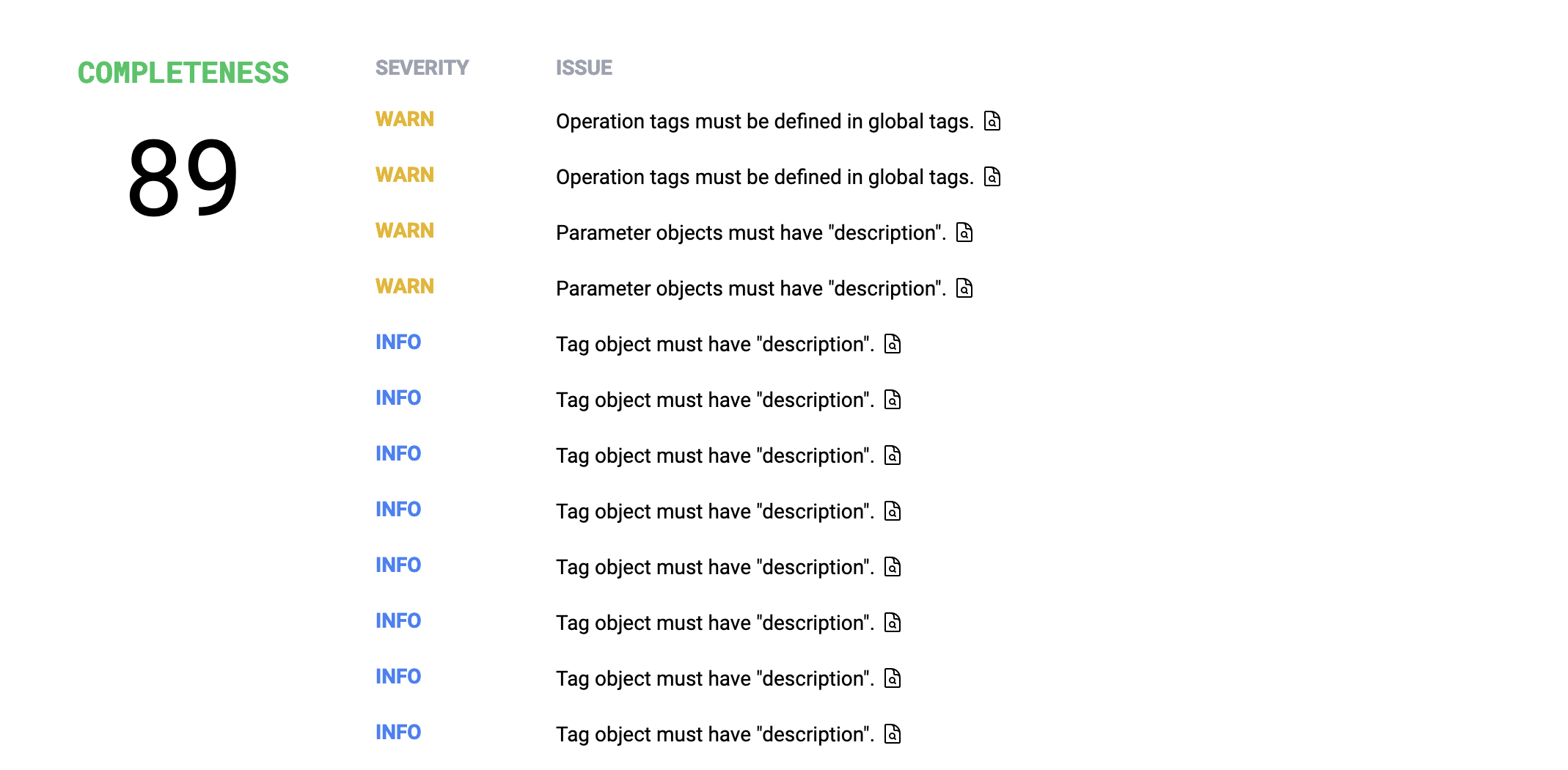

No complaints about these rules, and Completeness also didn't seem to have any problematic issues either... possibly because I use Spectral locally and had these rulesets enabled. 😅

Next!

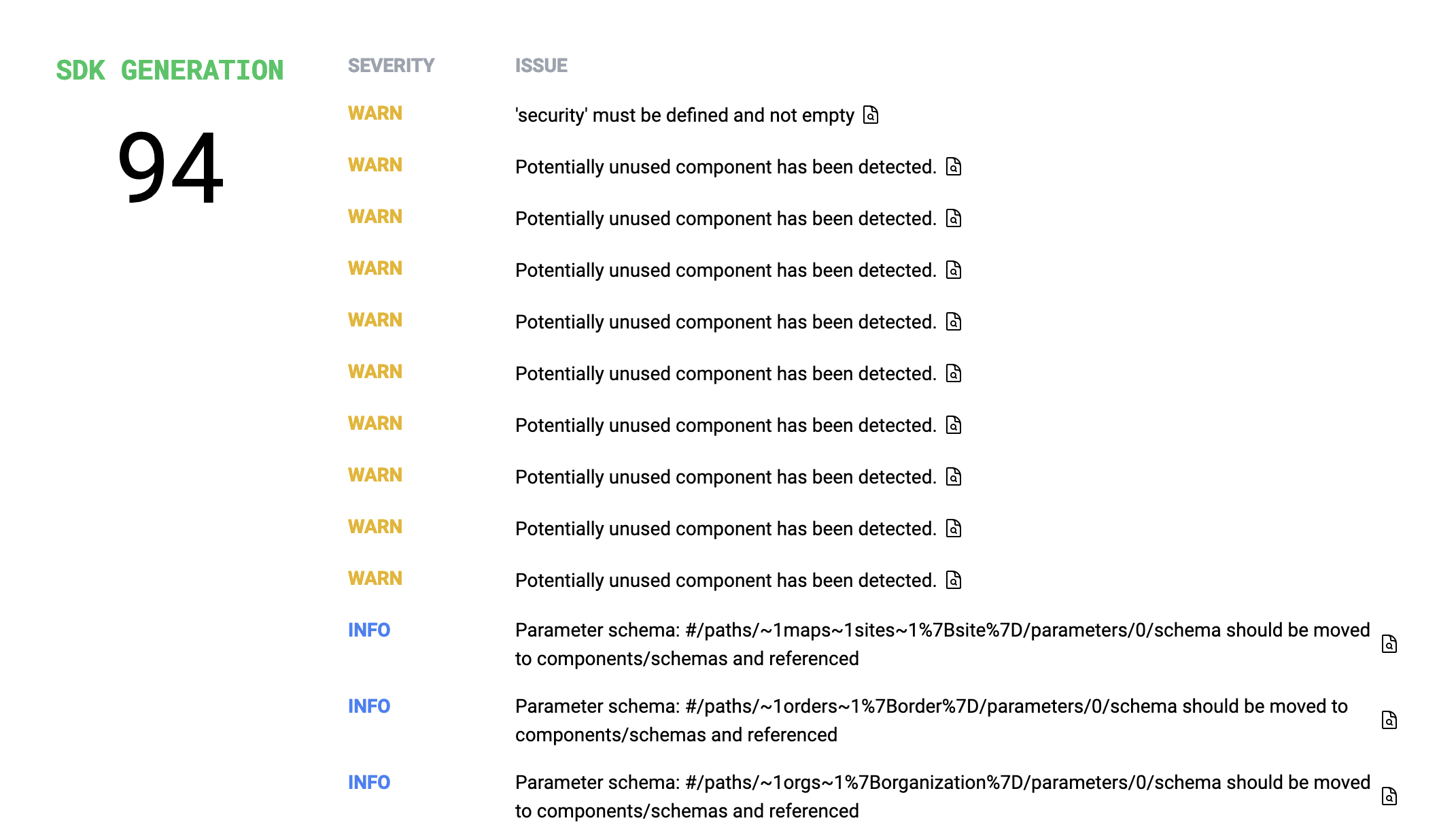

SDK Generation caught my eye.

I'm not entirely sure what these checks have to do with SDK generation.

The more information screen showed this:

Apimatic: It is recommended to define at least one authentication scheme globally using the securitySchemes property in the components section and use it globally to authenticate all the endpoints or use it for specific endpoints.

Oh hey Apimatic! We talked about their SDK generation tooling recently, but I wasn't expecting to see them pop up. It turns out Rate my OpenAPI utilized recommendations from Apimatic, and they have a rule that says:

At Least One Security Scheme

In order to protect itself from outside cyberattacks, an API should define an authentication scheme and should authenticate endpoint requests made by end users. For OpenAPI specification, it is recommended to define at least one authentication scheme globally using the securitySchemes property in the components section and use it globally to authenticate all the endpoints or use it for specific endpoints.

This seems a bit out of place. Perhaps the Security category should be giving me feedback about security, but SDK generation doesn't care. If I was feeding this OpenAPI into Apimatic to generate SDKs it would care, as from memory I think they assume you'll have some sort of security defined globally. This feels a bit disconnected and end-users of Rate my OpenAPI would only ever get this context by digging through the source code, and finding the link to that blog post in the documentation, which is probably only ever going to be me doing that.

Whether this rule belongs here or not, this is another vote for an Explain section which really explains why, instead of just what.

Potentially unused component has been detected

This rule makes a lot of sense for SDK generation. Why build code that's not actually going to be used? That's going to confuse and pollute the code documentation and have people trying to call classes and methods that don't do anything. Trim the fat, remove the unused components from your OpenAPI, and keep the SDKs easy to work with.
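
For a sense of how a check like this might work, here is a rough Python sketch. It assumes a bundled document where every reference is an internal `#/components/schemas/...` pointer, it only looks at schemas, and the function name is hypothetical; real linters handle far more cases.

```python
def unused_schemas(openapi: dict) -> list[str]:
    """List components.schemas entries that no $ref in the document points at."""
    schemas = openapi.get("components", {}).get("schemas", {})
    referenced = set()

    def walk(node):
        # Recursively collect every "$ref" string found anywhere in the document.
        if isinstance(node, dict):
            ref = node.get("$ref")
            if isinstance(ref, str):
                referenced.add(ref)
            for value in node.values():
                walk(value)
        elif isinstance(node, list):
            for item in node:
                walk(item)

    walk(openapi)
    return sorted(
        name for name in schemas
        if f"#/components/schemas/{name}" not in referenced
    )
```

Note the limitation: a schema that is only referenced by itself, or by another unused schema, still counts as used here.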

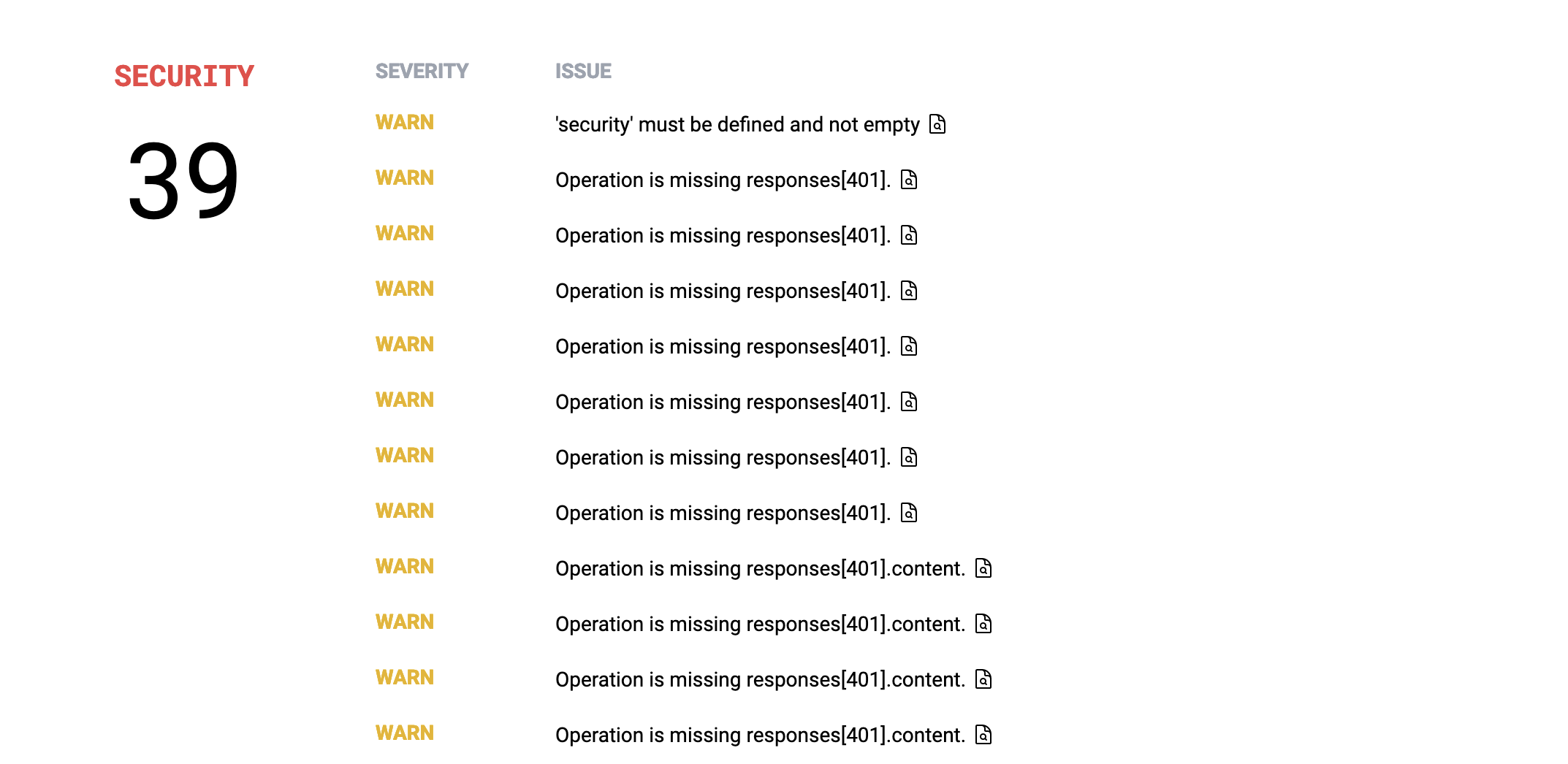

Here we go, this feels familiar. It's all based on the OWASP ruleset Stoplight had me put together before I vanished off into the woods forever.

WARN: 'security' must be defined and not empty

Heh, yeah you want to define some authorization and authentication for your API or it's going to be wide open to everyone. Sometimes you want this, and it might be a bit sad to eat the lower score for an API that is legitimately a completely public read-only API, but these tools can't do everything.

WARN: Operation is missing responses[401].

WARN: Operation is missing responses[401].content.

Absolutely to the first, I should be defining 401 errors in my OpenAPI and to be quite frank I have been lazy in not bothering. This is a bit redundant though, because it's telling me I have not defined how the content looks in the 401 response that I have not defined, so... we only need the first error to show up in this instance.

INFO: All 2XX and 4XX responses should define rate limiting headers.

This rule showed up in API Insights too and dinged my score, saying I must have rate limiting headers set up. Rate my OpenAPI classifies it as an info and to be honest I'm not sure if that's affecting my rating or not. It feels a bit less like a demand, which you might prefer depending on how much you care about rate limiting, and whether it's defined by your API or your API Gateway.

Which Tool is "The Best"?

Well I don't think I can pick one, and not because both of these tools sponsor APIs You Won't Hate. 🤣

They are fundamentally different.

API Insights sets out to rate your OpenAPI and your API implementation; in fact it abstracts away the difference between the two things. It has far fewer rules, though, and some of them may be a bit too opinionated for you. Perhaps I am too opinionated, but it definitely gave me some things to think about, and the focus on pointing me towards security headers I had entirely forgotten to add in production was massively helpful.

Rate my OpenAPI focuses on rating your OpenAPI, and does that using a lot of very standard rules like the OWASP ruleset for Spectral. Of course I like that, I wrote it, but those rules are all based on the OWASP API Security project, and they are all things you should do. It does not mention security headers in the API implementation because it isn't looking there, but that isn't the goal.

Give them both a spin and see what you think. We welcome your thoughts and suggestions. Your feedback is key to the continuous improvement and development of these tools.